The AGI Files: The Radical Ideology of Superintelligence

How human ideology will impact the creation of history's most important technology

Welcome to The AGI Files - a series of essays exploring superintelligence and its consequences.

Why write a series of essays on superintelligence?

Let’s start with an analogy: If you told me there was a chance of rain tomorrow where I live, I would carry an umbrella. If you told me there was a 1% chance tomorrow of deadly acid rain, I probably wouldn't step outside.

In short, humans often adjust their lives in anticipation of impactful future events, even low-probability events that are particularly impactful. However, strangely enough, very few people – even within the most tech-forward circles in Silicon Valley – spend enough time preparing for AGI, despite the fact that an AGI timeline is rapidly coming into focus and the impact of existing AI systems is increasingly measurable in society.

In my opinion, this is because the biggest changes in life are also the hardest to predict – and predicting AGI is a particularly hard and undefined challenge. But this doesn't mean we shouldn't try. I hope your presence indicates you are willing to try as well.

This essay in the AGI Files explores the emerging ideologies of AGI[1] and how they will impact its creation. Historically, technology shifts are predated by strong emergent forces of human ideology. I will argue that the development of AGI in the US is being impacted by human ideology and will explore why this is consequential.

1 The Link Between Ideology and Technology

The creation of the atomic bomb is often cited as a potential analogy to the creation of AGI. The parallels, on a surface level, are compelling – they’re both impactful technological achievements, they were (or will be) built by accomplished research teams, and they’re both harbingers of doom or peace (depending on who you ask). This analogy was actually the impetus for my thinking about the connection between human ideology and AGI – primarily because I think the comparison between the bomb and AGI succeeds and fails in misunderstood ways.

Let’s start by defining two ways to study technological change – P(Doom) and α(Ideology):

P(Doom) is a well-known term that represents the probability of doomsday. In this context, it measures how dangerous a given technological change could be, is, or was to the human race.

α(Ideology) is a term I’ve coined. In this context, it represents the strength or pervasiveness of an ideology predating a technological change.

These are obviously not the only two lenses through which you could study technological change, but I believe they are among the most important for studying AGI. It’s also worth noting that P(Doom) and α(Ideology) are both highly qualitative metrics.

If we focus these two lenses onto important technological creations throughout history, the connection between ideology and technology begins to emerge…

It's worth calling out - aside from the atomic bomb, bioweapons of mass destruction are the only known human technology in existence with a high P(Doom).

So, what conclusions can we draw from this road trip through history?

Conclusion #1: Human ideology can strongly influence the creation and acceleration of technology, and there is a strong historical basis for this.

Conclusion #2: Humanity has yet to create a technology with a high value of P(Doom) and a high value of α(Ideology).

Conclusion #3: Historically, all high P(Doom) technologies have been created and owned by governments. Equivalently, the corporate sector/free market has never produced high P(Doom) technology.

Hypothesis: AGI appears to have the characteristics of the first ever technology with a high value of P(Doom)[2] and a high value of α(Ideology), likely because it is being built through the corporate sector and not within the government.

Let’s test and explore this hypothesis.

2 The Emerging (and Powerful) Ideology of AGI

2.1 The Current Ideological Paradigm

The emerging ideologies of AGI, particularly if you measure their size by the number of existing acronyms, resemble something like a fast-multiplying, many-headed hydra. Stay on X long enough and it feels as if every time you learn an acronym, three new ones have sprouted up, rearing their ugly heads and demanding your attention.

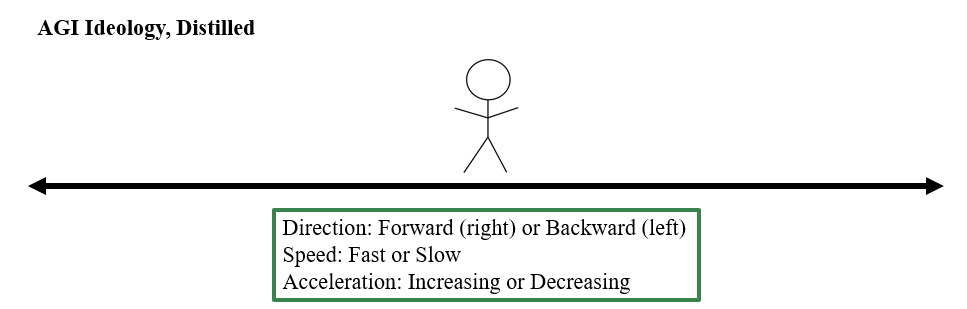

It's no longer so simple to represent AI ideology according to the effective altruism (EA) vs. effective accelerationism (E/ACC) two-party system. I prefer a slightly different heuristic where all emerging ideological approaches to AGI have a direction (forward or backward), speed (fast or slow), and acceleration (increasing or decreasing). Today, there are three primary categories of ideology – a desire to move forward faster, a desire to move forward slower, or a desire to stay still or go backward. A few examples:

It's worth noting that while I strongly believe the three-way framework presented above is the best way to think about existing AGI ideologies, some of the more specific views straddle these categories, depending on the individual. For example, some individuals claim to be effective altruists through the lens of halting AI. Similarly, some transhumanists claim to be effective altruists. In short, it’s important to listen to what someone actually believes vs. simply taking a label or acronym at face value.

2.2 Processing the Current Ideological Paradigm

Perhaps the most immediately striking aspect of the current ideological paradigm for AGI is how prescriptive it is. In general, human ideology can be prescriptive, meaning it aims to guide behavior or thinking (e.g., the field of normative ethics investigates how one morally should act), or it can be descriptive, meaning it doesn’t espouse certain behaviors or actions, but simply tries to understand things without judgment (e.g. many branches of existentialism describe the human experience without prescribing how to live). You could argue that a sound descriptive basis is often necessary to be prescriptive (for example, how can you tell someone what to do if you don't know anything?).

It is interesting that AGI ideology is almost entirely prescriptive, and strongly so. It seeks to influence the creation of AGI and is not satisfied with merely describing it. This underscores the particular influence of ideology on this technology, while also illustrating that we are seeking to change something that we don’t yet fully understand.

This is not too surprising, considering AGI’s potential for a high P(Doom). Stakeholders want to get things right because the cost of getting things wrong is simply too high. Equivalently, we could say that fear is a significant motivating factor across all three groups. The “stay still or go backwards” crowd fears the dangers of AGI and sees no reasonable path to avoiding these dangers while creating AGI. The “move forward slower” crowd fears the dangers of certain kinds of AGI but believes we can carefully navigate a path towards a safe AGI. And the “move forward faster” crowd fears that the disadvantages (economic, geopolitical, regulatory, etc.) of moving slowly outweigh the risks of accelerating.

Unfortunately, we don’t have strong evidence to support any of these ideological claims today. This is because none of what any group fears has been meaningfully realized. Until that changes, we exist in an era of ideological speculation, which allows ideologies to run rampant and to possess fairly coherent supporting arguments. Of course, there are numerous smaller data points that ideologists will argue support their claims. For example, we could point to recent research demonstrating that smart agents tend to scheme/seek power or cite recent comments about AI-related layoffs (e.g., Salesforce and Meta) as evidence that we should slow down or halt AGI progress. Alternatively, we could cite China’s rapid frontier model progress and the historical importance of technology for economic/geopolitical hegemony as arguments that we need to accelerate.

2.3 Can AGI Ideology Influence AGI?

By this point, I’ve hopefully convinced you that AGI ideology exists. But now we need to ask – are there mechanisms for AGI ideology to impact the creation of AGI itself? I would argue there are:

AI researchers can choose where they work based on ideological alignment, effectively voting with their time and research contributions.

AI customers – both consumer and enterprise – can vote with their wallet, giving capital to research labs that align with their individual ideology. (VCs also technically vote with their wallets but I don't give us much credit - I don't think we're a particularly ideological bunch).

While nascent, we might also point to regulators, who may produce AI legislation based on their (or their party's/administration's) ideological beliefs.

Thus, unlike secret government projects like the Manhattan Project or bioweaponry, AGI is more readily influenceable by a broader ideological public (n.b., I use "broader" on a relative basis – the mechanisms for influencing AGI clearly do not resemble a true democracy). This is not necessarily a good thing by the way (to be explored later in this essay).

2.4 Will AGI Ideology Influence AGI?

We now have two conclusions – that AGI ideology exists AND there exist mechanisms for these ideologies to influence the creation of AGI. But still, this does not guarantee that AGI ideology WILL influence AGI.

Other factors may have a much stronger effect – such as the laws of capitalism. For example, AI researchers may simply choose to work wherever they are paid the most, AI customers may only choose to use the cheapest or most performant models (n.b., any company experimenting with Chinese models is decidedly NOT ideologically motivated), AI research labs may shut down if their unit economics suck (looking at you, Anthropic), and regulators may be influenced by lobbying efforts from commercial interests.

I do believe that other effects, such as capitalism, are real – even extremely strong. But I wouldn't discount the effect of ideology either. Safety matters to many consumers and enterprises, and labs are pursuing radically different approaches to appeal to different types of users (consider Grok vs. Gemini). Furthermore, I also believe that AI researchers, perhaps more than any other group, care profoundly about AGI ideology, while also having one of the most important and impactful “votes.” I directly and indirectly know many researchers who have made career decisions or have switched labs (or were even fired) due to their ideological alignment, sometimes even at the expense of salary. This is not just an employee-led movement; it's also led by executives. Anthropic is well-known for vetting ideological alignment during the hiring process. Anecdotally, I have a friend who was recently denied a senior research position at xAI due to perceived ideological misalignment.

3 Implications and Consequences of a Radicalized AGI

So here we are…on the cusp of creating one of humanity’s most important and potentially dangerous technologies and doing so through a powerful ideological mechanism. Aside from historical novelty, what are the implications?

Potential division of AI talent: We do not exist in a world where we can take our smartest AI researchers, aggregate their talents into a single lab, and sprint at building AGI. This creates a division of best-in-class talent, likely slowing down the AGI timeline. Of course, there may be efficiency gains from this division of talent (because ideologically opposed people tend not to work well together, or because competition breeds excellence), but these efficiency gains exist only because talent is already ideologically opposed in the first place.

Major research breakthroughs will be scattered, reducing competitive advantages: The potential division of AI talent will result in scattered research breakthroughs. Achieving AGI requires research advances in a multitude of domains, and since domain experts will be at multiple labs, we can expect independent breakthroughs at multiple labs (this has already played out many times in the last few years). Scattered breakthroughs make it more challenging for labs to create robust and consistent research advantages, limiting competitive edge. This also helps close the gap between open source and closed source models, which may have significant implications on the commercial viability of closed source.

Inefficient allocation of resources: With many labs shooting for AGI, increasingly scarce resources are being allocated to redundant tasks. Each independent lab needs to raise funding, collect data, hire researchers, invest in infrastructure and compute, use energy…the list goes on. Many experts believe the infrastructure and energy needed to create AGI will alone require significant investment relative to what exists today. Each research lab, assuming it stays in the game, effectively represents a significant stepwise increase in redundant resource requirements. Over time, I expect the resource allocation problem to become more pronounced and unwieldy.

Ideological division may stymie geopolitical decision-making and stunt America's geopolitical first-mover advantage: While ideological division may create a healthy dialogue around what AGI should look like, it can also erode America’s geopolitical first-mover advantage by slowing down AGI's creation. Authoritarian regimes like China are theoretically far better equipped in this regard to centralize talent, ignore ideological concerns, and produce AGI as rapidly as their research breakthroughs and infrastructure allow. AGI has the potential to be an enormous source of geopolitical and economic hegemony, and America may be slowly relinquishing its lead.

Research lab ideological alignment may take on a political dimension: Just as other technological debates (big tech, regulation, etc.) have taken on a political dimension and found homes on opposing party lines, I anticipate AGI and its ideology to eventually take on a political dimension and align itself with our two-party system. I predict this will be a key development during the current Trump administration. In future administrations under different party leadership, we may see the U.S. flip-flop its stance and approach to AGI development to adhere to party ideology. I will even go out on a limb and predict that the Democrats will ultimately adopt a “stay still or go backwards” AGI platform. In addition to this resonating with their recent antagonism to big tech, it will appeal to anyone experiencing layoffs and may help them recapture their historically “pro-worker” platform.

It may be challenging to create AGI for the silent majority: Ideological polarization is a common phenomenon in systems with competing points of view, where the representatives of a viewpoint gravitate towards extreme positions rather than moderate stances, even when moderate stances are more inclusive of a populace. The obvious example is the U.S. two-party system. If AGI ideology polarizes further, it’s possible we will create an overly radicalized version of the technology that caters to niche radicals rather than a more moderate majority.

Technology that impacts the national interest is exposed to capitalism and other effects: As mentioned, human ideology is not the only factor impacting the creation of AGI. To the extent that ideology is, or becomes, an incredibly desirable factor – we have to consider the possibility that ideologically desirable labs may be undermined by things like basic market forces. For example, ideologically desirable labs may not produce the best products, nor have commercially capable executives, nor attract enough venture funding.

4 Should Ideology Impact AGI?

This is the last important question to consider. Unfortunately, it doesn’t have a clear answer. The implications and consequences of a radicalized AGI are a mixed bag. What we gain from certain types of efficiency, ideological variability, and economic incentives can also be offset by certain types of inefficiency, division, or geopolitical risk. Many of the consequences listed in Section 3 are themselves double-edged swords – capable of producing both good and harm.

Another way to answer this question would be to consider the alternative – that AGI is created à la the atomic bomb or bioweapons – i.e., by the government without the influence of ideology. While this is largely speculative, I believe this would reduce the influence of economics but vastly increase the influence of geopolitics on the technology. I believe that governments are more likely to consider safety risks and implement guardrails than commercially motivated research labs, which of course would make many accelerationists unhappy and many cautious ideologists proud. I also believe that creating AGI through independent research labs is significantly more open and democratic than creating AGI in a government lab. It’s also worth mentioning that the US government and its agencies have not demonstrated the ability to keep up with technology, especially in recent years, and so it’s unclear whether they would even be capable of producing AGI on a similar timeline.

Of course, unless the US government intervenes and attempts to nationalize or pseudo-nationalize elements of AGI, this is currently not a possible path for American AGI. In other words, the question of whether ideology should impact AGI is largely rhetorical, as its influence seems, at this point, inevitable.

5 Parting Thought

I first pitched this essay to a friend of mine who leads a safety team at a well-known research lab. His response struck a chord. He said something to the effect of:

"The AGI ideologies we see today are young and probably naive. I have a feeling the most impactful ideologies around AGI have yet to emerge."

He is 100% correct. This essay explores how human ideology can influence the creation of technology. However, it is also true that the creation of technology can produce powerful reactionary ideologies. Think of the ideological (and geopolitical) debate around the atomic bomb. Or consider the Luddites, the group of 19th-century textile workers who struggled against the automation created by the Industrial Revolution.

The concept of post-technology reactionary ideology and specifically the story of the Luddites are topics that deserve their own separate essay, which I will be sharing soon. Stay tuned!

[1] I’ll refrain from providing an absolute definition of AGI. I don’t think it matters (at least for this essay) because individual definitions of AGI differ based on ideology – and here, my goal is to explore AGI ideologies holistically. I’ll just assume that AGI is a really smart and capable AI and leave it at that.

[2] To quickly, but importantly, clarify – I am not an AI doomer. When I claim that AGI appears to have a high value of P(Doom), I merely claim that a significant number of people perceive it that way, or at least perceive it as having the potential to be catastrophic.